Technology is touching every aspect of finance, transforming decades-old practices and redefining core tasks. In our Disruption in Financial Services series, we explore how new tools and technologies are changing the business of finance and reshaping how financial professionals do their jobs.

Disruption in Financial Services

Racist Robots? How AI Bias May Put Financial Firms At Risk

Artificial intelligence (AI) is making rapid inroads into many aspects of our financial lives. Algorithms are being deployed to identify fraud, make trading decisions, recommend banking products, and evaluate loan applications. This is helping to reduce the cost of financial products and improve their efficiency and efficacy. However, there is growing evidence that some AI systems are biased in ways that may harm consumers and employees. As regulators turn their attention to the impact of financial technology on consumers and markets, firms that deploy AI may be exposing themselves to unanticipated risks.

Every day, thousands of people receive automatic fraud alerts from their credit card companies informing them of suspicious transactions. What many don’t realize is that behind those alerts often lies an AI – an algorithm that sifts through and evaluates billions of credit card transactions, learning normal spending patterns and flagging transactions that look out of the ordinary. Fraud alert AI is just one of the many ways in which AI is being woven into the day-to-day business of finance.

What is AI?

At its core, AI is simply the attempt to teach machines to imitate or reproduce human beings’ natural intelligence – the ability to recognize objects, make decisions, interpret information, and perform complex tasks.

The advantages of creating AI systems capable of performing functions like fraud monitoring, customer service, trading, and transaction processing are obvious. Well-designed and effective AI systems can reduce errors, increase speed, and cut costs by reducing the need for human workers. They can also provide firms with a competitive edge and enable faster growth and the development of new products.

However, AI is not risk-free. On the contrary, the fundamental structure of AI creates a host of risks and vulnerabilities.

How does AI work?

Attempts to replicate how human brains acquire and interpret information have been largely unsuccessful. Researchers have therefore turned to other methods to create effective AI. A traditional approach has been to use huge amounts of data to teach machines how to perform tasks.

Consider, for example, image recognition. Human toddlers can quickly learn to distinguish between pictures of cows and horses, but machines historically struggled to do so because the animals look so similar. However, researchers found that by showing algorithms millions of images of cows and horses – and telling them which was which – they could, over time, train the algorithms to tell accurately whether a new picture showed a cow or a horse. This basic technique, known as supervised learning, can be used to train AI systems to perform a range of tasks. For example, to train a fraud monitoring AI, the algorithm can be given historical transaction data in which each transaction is labeled as legitimate or fraudulent. After enough examples, it will be able to flag new transactions that resemble the fraudulent ones.
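
To make the idea of learning from labeled examples concrete, here is a minimal Python sketch. It assumes just two hypothetical features per transaction (amount and hour of day) and uses a deliberately simple nearest-centroid classifier in place of the much richer models real fraud systems use; the data, feature scales, and function names are illustrative assumptions, not any firm's actual method.

```python
from math import dist
from statistics import mean

# Hypothetical labeled training data: (amount in $, hour of day) -> label.
TRAINING = [
    ((25.0, 12), "legit"),
    ((40.0, 18), "legit"),
    ((12.5, 9), "legit"),
    ((60.0, 20), "legit"),
    ((950.0, 3), "suspicious"),
    ((1200.0, 2), "suspicious"),
    ((800.0, 4), "suspicious"),
]


def scale(features):
    """Put both features on a roughly 0-1 scale (assumed ranges) so neither dominates."""
    amount, hour = features
    return (amount / 1000.0, hour / 24.0)


def fit(examples):
    """'Train' by averaging the scaled features of each label (a nearest-centroid model)."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(scale(features))
    return {
        label: tuple(mean(column) for column in zip(*points))
        for label, points in by_label.items()
    }


def predict(centroids, features):
    """Give a new transaction the label whose average example it most resembles."""
    point = scale(features)
    return min(centroids, key=lambda label: dist(point, centroids[label]))


model = fit(TRAINING)
print(predict(model, (30.0, 14)))   # legit
print(predict(model, (1100.0, 3)))  # suspicious
```

The labels do all the work here: the model only "knows" what suspicious looks like because someone told it, which is also why flawed labels become flawed predictions.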

A different machine learning approach, known as unsupervised learning, is to provide algorithms with basic programming and a huge trove of unlabeled data – pictures of cows and horses, say, or credit card transaction data – and allow them to find the structure in it themselves. Over time, the algorithm figures out how to group images into categories or identify transactions that do not fit the general pattern, effectively teaching itself to sort images or spot potentially fraudulent transactions.
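
The unsupervised idea can be sketched in a few lines of Python. Nothing here is labeled: the code infers what is "normal" for one hypothetical customer's spending and flags amounts that do not fit. It substitutes a simple, well-known statistical rule (a median-based "modified z-score" with a commonly used cut-off of 3.5) for the far more sophisticated models real fraud systems use; the data and function name are illustrative assumptions.

```python
from statistics import median


def flag_unusual(amounts, threshold=3.5):
    """Flag amounts that sit far from the typical pattern in this history.

    No labels are used: 'typical' is inferred from the data itself, using the
    median and the median absolute deviation (MAD), which are less distorted
    than the mean and standard deviation by the very outliers being hunted.
    """
    center = median(amounts)
    mad = median(abs(a - center) for a in amounts)
    if mad == 0:
        # More than half the amounts equal the median; flag anything that differs.
        return [a != center for a in amounts]
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score.
    return [0.6745 * abs(a - center) / mad > threshold for a in amounts]


# Hypothetical card history for one customer (amounts in dollars).
history = [23.5, 41.0, 18.2, 35.0, 27.9, 30.1, 22.4, 1450.0]
print(flag_unusual(history))  # [False, False, False, False, False, False, False, True]
```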

Big breaches and biased bots

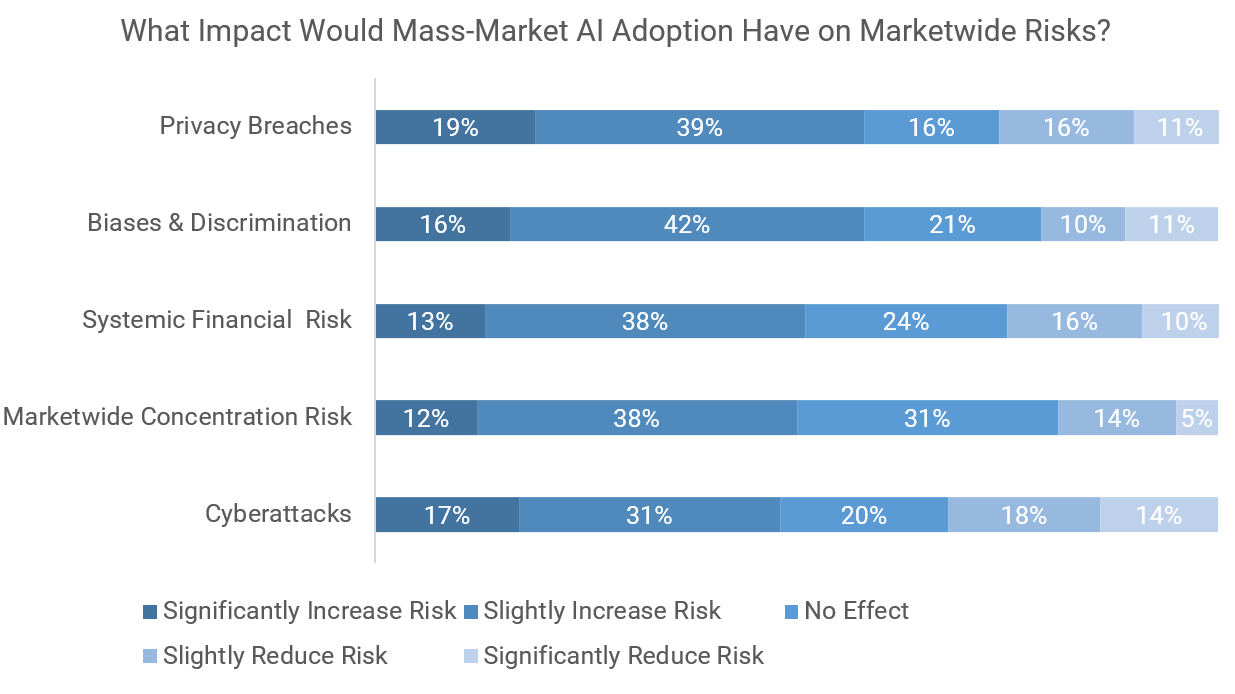

This reliance on data and programming is the source of several risks inherent in AI. First, accumulating huge troves of data creates a security vulnerability. A World Economic Forum survey of financial firms found that 58% of respondents believed AI adoption would increase the risk of major privacy breaches. Hackers frequently target financial firms’ databases, and major data breaches can be costly: they may lead to reputational damage and sizeable fines.

Source: Transforming Paradigms: A Global AI in Financial Services Survey. World Economic Forum.

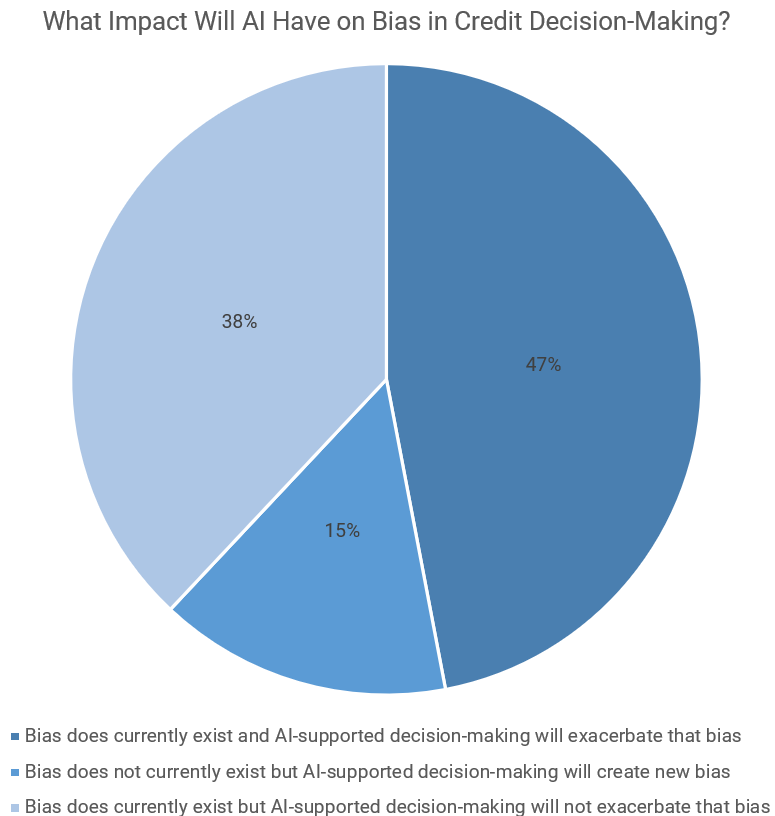

There is also a more insidious risk. By relying on programmers or existing pools of data to train AIs, firms risk baking existing prejudices into their algorithms. Indeed, 58% of respondents to the World Economic Forum survey expect AI adoption to increase the risk of bias and discrimination in the financial system.

Consider, for example, a hypothetical US bank creating an AI to evaluate mortgage applications. Taking a machine learning approach, the bank may use its historical mortgage approval data to teach the algorithm what a creditworthy applicant looks like. However, those historical mortgages were approved by humans, and their decisions may display a pattern of bias against people of color, single female applicants, blue-collar workers, or the young. By using this data to train the algorithm, the bank would teach it to reject such applicants in the future, reproducing the old bias automatically.
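
The mechanism can be illustrated with a deliberately crude Python sketch. The "model" simply learns, for each neighborhood, the lowest income that was approved in the past, then applies that bar to new applicants. The data, the neighborhood feature, and the function names are hypothetical. Note that no protected characteristic appears anywhere in the code: if neighborhood happens to correlate with race or age, the historical bias still passes straight through to new decisions.

```python
# Hypothetical historical decisions: (neighborhood, income in $k, approved?).
# Assume past human reviewers held applicants from neighborhood "B" to a higher bar.
HISTORY = [
    ("A", 45, False), ("A", 52, True), ("A", 60, True), ("A", 75, True),
    ("B", 55, False), ("B", 65, False), ("B", 78, False), ("B", 85, True),
]


def learn_thresholds(history):
    """'Train' by recording, per neighborhood, the lowest income that was ever approved."""
    thresholds = {}
    for area, income, approved in history:
        if approved:
            thresholds[area] = min(income, thresholds.get(area, income))
    return thresholds


def decide(thresholds, area, income):
    """Approve if income meets the bar the historical data implies for that area."""
    return income >= thresholds.get(area, float("inf"))


model = learn_thresholds(HISTORY)
print(model)                   # {'A': 52, 'B': 85}
print(decide(model, "A", 60))  # True
print(decide(model, "B", 60))  # False: same income, different outcome
```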

This creates two risks. First, the bank may lose out on a large pool of potential customers. If, for example, many young people or people of color moved into the bank's area, the AI would reject their mortgage applications and they would take their business to a different bank. Second, the bank may be exposed to complaints under anti-discrimination laws. If the government or consumers were able to show a systematic pattern of bias, the bank could face fines and other penalties.

A similar problem arises if, instead, the bank asks its mortgage team to work with programmers to create a set of rules for approving loans. In this case, any biases held by the programmers and employees, conscious or not, could be baked into the rules the algorithm follows.

Source: Transforming Paradigms: A Global AI in Financial Services Survey. World Economic Forum.

What can firms do?

Protecting against the risks posed by AI bias must be a priority for firms planning to use algorithms in decisions and processes that affect customers and employees. However, mitigating bias is not a simple task. One crucial step is ensuring that development teams are diverse. Some research suggests that more diverse programming teams build fairer, less biased algorithms.

Another important step is to make identifying potential bias a priority during testing. Test simulations should be designed to detect patterns of bias, so that the issues causing them, such as biased training data or instructions, can be addressed before launch.
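
As an illustration, one simple check a test simulation could include is sketched below in Python. It compares approval rates across groups and flags any group whose rate falls below 80% of the best-treated group's rate, a threshold borrowed from the "four-fifths" rule of thumb in US employment-selection guidelines. The group labels, test data, and function names are hypothetical, and a flagged ratio is a prompt for investigation rather than proof of bias.

```python
from collections import defaultdict


def approval_rates(outcomes):
    """Approval rate per group from (group, approved) pairs produced by a test run."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in outcomes:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def flag_disparate_impact(outcomes, threshold=0.8):
    """Map each group whose rate is below `threshold` x the highest rate to its ratio.

    0.8 mirrors the 'four-fifths' rule of thumb; the result lists groups to
    investigate, not a verdict.
    """
    rates = approval_rates(outcomes)
    best = max(rates.values(), default=0)
    if best == 0:
        return {}  # no data, or nobody approved: the ratios are undefined
    return {group: rate / best for group, rate in rates.items() if rate / best < threshold}


# Hypothetical simulated decisions on a held-out test set.
test_run = (
    [("group_x", True)] * 40 + [("group_x", False)] * 10
    + [("group_y", True)] * 25 + [("group_y", False)] * 25
)
print(approval_rates(test_run))         # {'group_x': 0.8, 'group_y': 0.5}
print(flag_disparate_impact(test_run))  # {'group_y': 0.625}
```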

Finally, it is important to ensure that bias is not introduced after launch. Many AI systems continue to learn after deployment, refining themselves as new data arrives. This process may introduce biases that were not present during initial testing, so ongoing monitoring is needed to ensure good outcomes.
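
One way such monitoring might work is sketched below in Python: keep a rolling window of recent live decisions for each group and flag the model for human review if the gap between groups' approval rates grows well beyond what was measured at launch. The class name, window size, baseline, and tolerance are all hypothetical choices, and a real system would likely track several fairness measures rather than this one.

```python
from collections import deque


class ApprovalGapMonitor:
    """Watch live decisions and flag when the gap between groups' approval rates grows.

    All names and defaults here are hypothetical. `baseline_gap` is the gap
    measured during pre-launch testing; `tolerance` is how much extra gap is
    accepted before the model is sent for human review.
    """

    def __init__(self, window=500, baseline_gap=0.0, tolerance=0.05):
        self.window = window
        self.baseline_gap = baseline_gap
        self.tolerance = tolerance
        self.recent = {}  # group -> deque of the most recent approve/decline outcomes

    def record(self, group, approved):
        # deque(maxlen=...) drops the oldest outcome once the window is full.
        self.recent.setdefault(group, deque(maxlen=self.window)).append(bool(approved))

    def current_gap(self):
        rates = [sum(d) / len(d) for d in self.recent.values() if d]
        return max(rates) - min(rates) if len(rates) > 1 else 0.0

    def needs_review(self):
        return self.current_gap() > self.baseline_gap + self.tolerance


monitor = ApprovalGapMonitor(window=100, baseline_gap=0.02, tolerance=0.05)
for i in range(100):
    monitor.record("group_x", i < 70)  # 70% approved
    monitor.record("group_y", i < 68)  # 68% approved
print(monitor.needs_review())  # False: gap is at the launch baseline

# Suppose the model later drifts and starts declining more group_y applicants.
for _ in range(30):
    monitor.record("group_y", False)
print(monitor.needs_review())  # True: group_y's recent approval rate has fallen to 38%
```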

AI has the potential to transform the financial system, reducing errors, lowering costs, improving accessibility, and benefiting consumers. However, the issue of bias means that some consumers are at risk of being harmed by the adoption of AI. To protect themselves and their customers, firms must ensure that bias considerations form a core part of their AI development processes.

Intuition Know-How has a number of tutorials that are relevant to AI and other topics discussed in the article above:

- Robotic Process Automation (RPA)

- Artificial Intelligence (AI)

- Data Analytics

- Risk – Primer

- Risk Management – An Introduction

- Credit Risk – An Introduction

- Credit Risk Customer Management – An Introduction